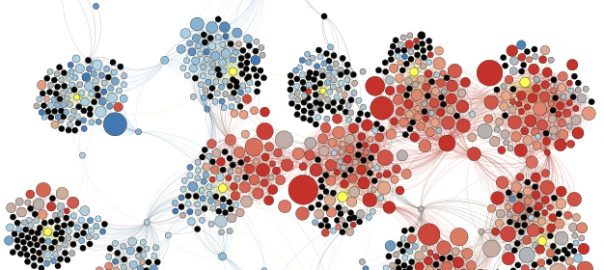

Our latest paper, "Neutral bots probe political bias on social media" by Wen Chen, Diogo Pacheco, Kai-Cheng Yang, and Fil Menczer, just came out in Nature Communications. We find strong evidence of political bias on Twitter, but not the kind many people assume: (1) the bias is conservative rather than liberal, and (2) it results from user interactions (and abuse) rather than platform algorithms. We tracked neutral "drifter" bots to probe political biases. In the figure, the drifters appear in yellow, and a sample of their friends and followers is colored according to political alignment. Large nodes are accounts that share many low-credibility links.

The news and information to which US Twitter users are exposed depend strongly on the political leaning of their early connections. The interactions of conservative accounts are skewed toward the right, whereas liberal accounts are exposed to moderate content that shifts their experience toward the political center. Partisan accounts, especially conservative ones, tend to receive more followers and follow more automated accounts. Conservative accounts also find themselves in denser "echo-chamber" communities and are exposed to more low-credibility content.

We are grateful to Knight Foundation and Craig Newmark for supporting our research, and to Paul Cheung for inspiring this project. More information can be found in the IU press release.