We study the structure and dynamics of Web traffic and social media usage patterns. One source of data is a stream of HTTP requests made by users at Indiana University (our Web traffic (click) dataset is available!). Gathering anonymized requests directly from the network allows us to examine large volumes of traffic data while minimizing biases associated with other data sources. However, we also leverage data from server logs and browser instrumentation. Referrer information is used to reconstruct the subset of the Web graph actually traversed by users.
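As a rough illustration of how referrer information can yield the traversed portion of the Web graph, the sketch below counts clicks on each (referrer, target) edge. The input format, the function name `build_traffic_graph`, and the treatment of referrer-less requests as entry points are assumptions made for this example, not a description of the project's actual pipeline.

```python
from collections import Counter

def build_traffic_graph(requests):
    """Count clicks per (referrer, target) edge.

    `requests` is an iterable of (referrer, target) pairs; a referrer of
    None means the request carried no referrer (hypothetical input format).
    """
    edges = Counter()         # (referrer, target) -> number of clicks
    entry_points = Counter()  # target -> requests that arrived with no referrer
    for referrer, target in requests:
        if referrer:
            edges[(referrer, target)] += 1
        else:
            entry_points[target] += 1
    return edges, entry_points

# Made-up requests, for illustration only.
requests = [
    (None, "a.example/"),
    ("a.example/", "b.example/page"),
    ("a.example/", "b.example/page"),
    ("b.example/page", "c.example/"),
]
edges, entry_points = build_traffic_graph(requests)
```

Only links that users actually followed appear as edges, so the result is a weighted subgraph of the Web rather than the full link graph.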

Our goal is to develop a better understanding of user behavior online and to create more realistic models of Web and social media browsing. The potential applications of this analysis include improved designs for networks, sites, and server software; more accurate forecasting of traffic trends; classification of sites based on the patterns of activity they inspire; and improved ranking algorithms for search results.

Among our more intriguing findings are that server traffic (as measured by number of clicks) and site popularity (as measured by distinct users) both follow distributions so broad that they lack any well-defined mean. Actual Web traffic turns out to violate three assumptions of the random surfer model: users don’t start from any page at random, they don’t follow outgoing links with equal probability, and their probability of jumping depends on their current location. Search engines appear to be directly responsible for a smaller share of Web traffic than is often supposed. These results were presented at WSDM 2008 (paper | talk).

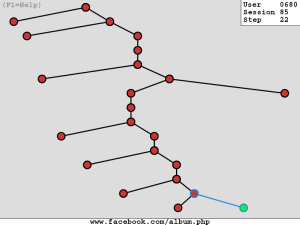
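For reference, the three random surfer assumptions that the traffic data contradict can be made concrete in a small simulation. This is a minimal sketch of the textbook model, not of our own model; the function name, the toy link graph, and the jump probability of 0.15 are illustrative assumptions.

```python
import random

def random_surfer(links, steps, jump_prob=0.15, seed=0):
    """Simulate the classic random surfer and return visit counts per page.

    `links` maps each page to a list of pages it links to; every linked
    page must also appear as a key.
    """
    rng = random.Random(seed)
    pages = sorted(links)
    visits = dict.fromkeys(pages, 0)
    # Assumption 1: the surfer starts from a page chosen uniformly at random.
    page = rng.choice(pages)
    for _ in range(steps):
        visits[page] += 1
        out = links[page]
        # Assumption 3: the jump probability is the same on every page.
        # (Pages with no outgoing links always jump, a common convention.)
        if not out or rng.random() < jump_prob:
            page = rng.choice(pages)
        else:
            # Assumption 2: outgoing links are followed with equal probability.
            page = rng.choice(out)
    return visits

# Toy graph, for illustration only.
links = {"a": ["b", "c"], "b": ["c"], "c": ["a"]}
visits = random_surfer(links, steps=10_000)
```

A more realistic model would replace each of the three uniform choices with one informed by observed traffic.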

Another paper (also here; presented at Hypertext 2009) examined the conventional notion of a Web session as a sequence of requests terminated by an inactivity timeout. Such a definition turns out to yield statistics dependent primarily on the timeout value selected, which we find to be arbitrary. For that reason, we have proposed logical sessions defined by the target and referrer URLs present in a user’s Web requests.
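The contrast between the two session definitions can be sketched as follows. The timeout version splits a user's requests wherever the idle gap exceeds a threshold. The logical version shown here attaches each request to the session that most recently contained its referrer URL and starts a new session otherwise. That attachment rule, the function names, and the request tuples are assumptions made for illustration; the paper gives the exact definition.

```python
def timeout_sessions(requests, timeout):
    """Split one user's time-ordered requests wherever the gap exceeds `timeout` seconds."""
    sessions = []
    last_time = None
    for time, referrer, target in requests:
        if last_time is None or time - last_time > timeout:
            sessions.append([])
        sessions[-1].append(target)
        last_time = time
    return sessions

def logical_sessions(requests):
    """Group one user's requests by following referrer -> target chains."""
    sessions = []
    session_of = {}  # URL -> index of the session it was most recently assigned to
    for time, referrer, target in requests:
        if referrer in session_of:
            idx = session_of[referrer]  # continue the session holding the referrer
        else:
            idx = len(sessions)         # empty or unseen referrer: start a new session
            sessions.append([])
        sessions[idx].append(target)
        session_of[target] = idx
    return sessions

# (time in seconds, referrer, target); made-up requests from a single user.
requests = [
    (0, None, "news/"),
    (10, "news/", "news/story1"),
    (20, None, "mail/"),
    (2000, "news/", "news/story2"),
    (2010, "mail/", "mail/inbox"),
]
by_timeout_30min = timeout_sessions(requests, 1800)
by_timeout_60min = timeout_sessions(requests, 3600)
by_referrer = logical_sessions(requests)
```

In this toy trace the number of timeout sessions changes with the threshold (two at 30 minutes, one at 60), while the referrer-based grouping separates the interleaved news and mail activity regardless of timing.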

Inspired by these findings, we designed a model of Web surfing able to recreate not only the broad distribution of traffic, but also the basic statistics of logical sessions. Late-breaking results were presented at WSDM 2009. Our final report on the ABC model was presented at WAW 2010.

Recent efforts aim to develop a general model of information foraging that could help understand how people decide when to browse, search, switch, or stop while consuming information, entertainment, and other resources online.

Project Participants

Support

Mark Meiss was supported by the Advanced Network Management Laboratory, one of the Pervasive Technology Labs established at Indiana University with the assistance of the Lilly Endowment.

This research was also supported in part by the National Science Foundation (under awards 0348940, 0513650, and 0705676) and in part by the Institute for Information Infrastructure Protection research program. The I3P is managed by Dartmouth College and supported under Award Number 2003-TK-TX-0003 from the U.S. DHS, Science and Technology Directorate.

Opinions, findings, conclusions, recommendations or points of view of this group are those of the authors and do not necessarily represent the official position of the U.S. Department of Homeland Security, Science and Technology Directorate, I3P, National Science Foundation, or Indiana University.